Available with Image Analyst license.

Remote-sensing imagery is collected by satellite, aircraft, or drone platforms. Not all remote-sensing imagery is the same; it is generally collected to meet specific project requirements. The conditions of each project drive the imagery collection and processing requirements, which in turn separate imagery into distinct types. The two primary distinctions are the information content of the imagery and its geometric characteristics. Whether imagery is analyzed visually or processed using remote-sensing image processing techniques, factors such as minimum mapping unit, spectral band width and placement, and geopositional accuracy determine whether a particular type of imagery suits your project’s goals and requirements.

Information content in imagery

The nature of the information contained in imagery depends primarily on three types of resolution: spatial, spectral, and temporal, which all affect the minimum mapping unit of a project.

Spatial resolution

Spatial resolution refers to the ground size of the pixels (picture elements) that make up the image and is often referred to as ground sample distance (GSD). GSD is a function of both the sensor's capability and its flying height. It determines the level of spatial detail and the types of features visible in the image. Generally, the smaller the pixel size, the greater the detail contained in the image. However, small pixel sizes, such as 3 inches or 10 centimeters, result in very large files that can be difficult to process, store, and manage. In addition, a feature extraction technique that works well at one GSD may fail at another. A small pixel size is therefore not always suitable for a project, such as land-cover classification for a state, province, or country. Spatial resolution is closely associated with a project’s minimum mapping unit, since a feature must span a sufficient number of pixels to be identified.
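The relationships in the paragraph above can be made concrete with a little arithmetic. The sketch below assumes a simple frame camera looking straight down over flat terrain, where similar triangles give GSD = pixel pitch × flying height / focal length. The camera values, the four 8-bit bands, and the "feature must span 4 pixels" rule are hypothetical illustration values, not requirements from any standard.

```python
def ground_sample_distance(pixel_pitch_um: float, focal_length_mm: float,
                           flying_height_m: float) -> float:
    """Nadir GSD in meters for a frame camera over flat terrain.

    By similar triangles: GSD = pixel pitch * flying height / focal length.
    """
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return pixel_pitch_m * flying_height_m / focal_length_m


def raw_size_gb(area_km2: float, gsd_m: float, bands: int = 4,
                bytes_per_sample: int = 1) -> float:
    """Uncompressed data volume (decimal GB) for area_km2 imaged at gsd_m."""
    pixel_count = area_km2 * 1e6 / gsd_m ** 2
    return pixel_count * bands * bytes_per_sample / 1e9


def smallest_feature_m(gsd_m: float, pixels_across: int = 4) -> float:
    """Smallest feature width if a feature must span `pixels_across` pixels.

    The default of 4 pixels is a hypothetical rule of thumb, not a standard.
    """
    return gsd_m * pixels_across


if __name__ == "__main__":
    # Hypothetical camera: 4.6 um pixels, 100 mm lens, flown at 1,000 m.
    gsd = ground_sample_distance(4.6, 100.0, 1000.0)
    print(f"GSD: {gsd:.3f} m")
    for g in (0.1, 1.0, 10.0):
        print(f"{g:>5} m GSD: {raw_size_gb(100.0, g):10.3f} GB per 100 km2, "
              f"smallest feature ~{smallest_feature_m(g):.1f} m")
```

Because pixel count grows with the inverse square of GSD, covering 100 km² at 0.1 m takes about 40 GB uncompressed under these assumptions, versus about 0.4 GB at 1 m: a tenfold finer GSD means a hundredfold more data.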

Spectral resolution

Spectral resolution is determined by the sensor and describes which portions of the electromagnetic spectrum the sensor measures. The spectral characteristics of a sensor comprise the number of imaging bands, the wavelengths of the bands, the spectral width of the bands, and the signal-to-noise ratio. Together, these characteristics determine how well the sensor can measure the spectral signature of a feature, and therefore the types of features and phenomena that can be detected and mapped. Multispectral sensors collect four or more relatively wide (50 to 80 nanometers), nonoverlapping bands placed to distinguish broad categories of features such as vegetation, soil, water, and human-made features. Hyperspectral sensors collect many (more than 100) narrow bands (5 to 10 nanometers) strategically placed to sense specific portions of a feature’s spectral signature. Hyperspectral sensors provide more detailed information, such as vegetation species, water quality, or the characteristics of a material. They are less common than multispectral sensors because they are usually deployed on aircraft, are more expensive, and require specialized expertise to operate and to process and analyze the data.
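Two quick calculations illustrate band width and band placement. The first counts how many equal-width, nonoverlapping bands fit in a spectral range, showing why narrow hyperspectral bands quickly exceed 100. The second uses the normalized difference vegetation index (NDVI), one common way of exploiting red and near-infrared band placement to separate vegetation from other cover; it is named here as an example technique, not one prescribed by this document. The 400 to 1000 nm range and the reflectance values are hypothetical.

```python
def contiguous_band_count(start_nm: float, end_nm: float, band_width_nm: float) -> int:
    """How many nonoverlapping bands of equal width fit between start_nm and end_nm."""
    if band_width_nm <= 0:
        raise ValueError("band width must be positive")
    return int((end_nm - start_nm) // band_width_nm)


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index: (NIR - red) / (NIR + red).

    Healthy vegetation reflects strongly in the near infrared and absorbs red
    light, so it tends toward positive values; water tends toward negative values.
    """
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


if __name__ == "__main__":
    # Visible and near-infrared range, 400-1000 nm.
    for width in (60, 10, 5):
        print(f"{width:>2} nm bands: {contiguous_band_count(400, 1000, width)} bands")
    # Hypothetical reflectance values, for illustration only.
    print(f"vegetation NDVI: {ndvi(nir=0.50, red=0.08):.2f}")
    print(f"water NDVI:      {ndvi(nir=0.02, red=0.05):.2f}")
```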

Many sensors, such as thermal infrared sensors, operate in nonvisible portions of the spectrum. Thermal sensors are electro-optical, but they record the portion of the spectrum that represents emitted heat rather than reflected solar energy. Geometrically, thermal imagery is similar to other electro-optical imagery, but it records portions of the electromagnetic spectrum not visible to the eye.
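Why thermal sensors see emitted rather than reflected energy can be sketched with Wien's displacement law, which gives the wavelength at which an idealized blackbody emits most strongly. Treating the sun and the earth's surface as blackbodies is an approximation, and the temperatures below are rounded illustration values.

```python
# Wien's displacement constant, in micrometer-kelvins.
WIEN_B_UM_K = 2897.77


def peak_emission_um(temperature_k: float) -> float:
    """Wavelength (micrometers) at which a blackbody at temperature_k emits most strongly."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive kelvins")
    return WIEN_B_UM_K / temperature_k


if __name__ == "__main__":
    # Approximate temperatures: the sun's surface and a typical land surface.
    for name, temp in (("sun", 5778.0), ("earth surface", 300.0)):
        print(f"{name:>13}: peak near {peak_emission_um(temp):.2f} um")
```

Sunlight peaks in the visible range (about 0.5 micrometers), while a surface near 300 K peaks near 10 micrometers in the thermal infrared, which is where thermal sensors collect.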

There are also active sensors, such as radar, that provide their own illumination. These sensors operate at wavelengths much longer (and therefore frequencies much lower) than their electro-optical counterparts, and they produce imagery whose geometry departs from the perspective geometry of optical imagery.
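The inverse relationship between wavelength and frequency, wavelength = speed of light / frequency, shows how far radar sits from the visible range. The sketch below uses a C-band frequency of about 5.4 GHz and green light at 550 nm as example values.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in meters for an electromagnetic wave of the given frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def frequency_hz(wavelength_in_m: float) -> float:
    """Frequency in hertz for an electromagnetic wave of the given wavelength."""
    return SPEED_OF_LIGHT_M_S / wavelength_in_m


if __name__ == "__main__":
    radar = wavelength_m(5.4e9)  # a C-band radar frequency, about 5.4 GHz
    green = 550e-9               # green light, 550 nm
    print(f"C-band radar wavelength: {radar * 100:.1f} cm")
    print(f"green light frequency:   {frequency_hz(green):.2e} Hz")
    print(f"radar wavelength is ~{radar / green:,.0f} times longer")
```

A wavelength of a few centimeters is roughly five orders of magnitude longer than visible light, which is why radar frequencies are described as much lower.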

Temporal resolution

Temporal resolution refers to how frequently a geographic location is covered, usually by a class of satellite sensor platforms. It is determined by the number of satellite overpasses, orbital mechanics, and the agility of the sensor platform. For satellites that point vertically down at the earth, such as the Landsat series, the temporal resolution is 16 days; that is, a satellite returns to image the same geographic location every 16 days. Geostationary satellites, such as weather satellites, are an exception: they remain over the same area and can image it frequently, but at coarse spatial resolution. High-resolution satellite systems often achieve higher temporal frequency by deploying multiple similar sensor systems in complementary orbits and by pointing the sensor off-nadir, away from vertical viewing. While off-nadir viewing increases temporal resolution, perhaps to daily coverage of the same geographic location, the resulting imagery is oblique and has a larger GSD.
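The two mechanisms described above, multiple satellites and off-nadir pointing, can be sketched with idealized arithmetic. The revisit function assumes identical satellites evenly phased in the same orbit, which is how, for example, two satellites on a 16-day cycle flown half a cycle apart can provide 8-day coverage. The pointing function ignores earth curvature. The 500 km altitude and 30 degree limit are hypothetical values.

```python
import math


def constellation_revisit_days(single_revisit_days: float, n_satellites: int) -> float:
    """Idealized revisit for n identical satellites evenly phased in the same orbit.

    Real constellations rarely achieve perfectly even phasing, so treat this as a
    best case.
    """
    if n_satellites < 1:
        raise ValueError("need at least one satellite")
    return single_revisit_days / n_satellites


def accessible_width_km(altitude_km: float, max_off_nadir_deg: float) -> float:
    """Flat-earth width of ground a pointable sensor can reach from one ground track.

    Ignores earth curvature, which makes the true width somewhat larger.
    """
    return 2.0 * altitude_km * math.tan(math.radians(max_off_nadir_deg))


if __name__ == "__main__":
    print(constellation_revisit_days(16, 1))  # a single satellite on a 16-day cycle
    print(constellation_revisit_days(16, 2))  # two satellites offset by half a cycle
    print(f"{accessible_width_km(500.0, 30.0):.0f} km reachable when pointing up to 30 degrees")
```

Pointing widens the strip of ground the sensor can reach from each pass, so a location comes within reach more often, at the cost of oblique viewing.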

With the advent of imaging drone technology, temporal resolution has increased dramatically. Drones can monitor a location daily, several times a day, or continuously. This technology has enabled many types of monitoring applications that were previously not possible.

Note:

The extent of coverage of a remote-sensing imaging system is another important factor when considering appropriate imagery for a project. Imaging extent refers to the footprint, or coverage on the ground, of the sensor. Satellite sensors have a very large footprint, generally 10 to 200 kilometers or more, while aerial sensors have a considerably smaller footprint of many hundreds of meters per image, depending on the sensor and flying height. Drones have a small footprint of tens of meters.
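The footprint of a single frame is simply its pixel count times its GSD, and the footprint in turn drives how many overlapping frames a collection needs. The sketch below assumes a rectangular site flown in parallel strips; the 60 percent forward overlap and 30 percent side overlap defaults, and the drone camera values, are hypothetical planning numbers.

```python
import math


def footprint_m(pixels: int, gsd_m: float) -> float:
    """Ground footprint along one image dimension: pixel count times GSD."""
    return pixels * gsd_m


def frames_needed(extent_m: float, footprint_len_m: float, overlap: float) -> int:
    """Frames along one dimension so that overlapping footprints span extent_m."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    step = footprint_len_m * (1.0 - overlap)      # new ground covered per frame
    extra = max(0.0, extent_m - footprint_len_m)  # extent left after the first frame
    return math.ceil(extra / step) + 1


def images_to_cover(width_m, length_m, footprint_w_m, footprint_h_m,
                    side_overlap=0.3, forward_overlap=0.6):
    """Approximate frame count for a rectangular block flown in parallel strips."""
    strips = frames_needed(width_m, footprint_w_m, side_overlap)
    per_strip = frames_needed(length_m, footprint_h_m, forward_overlap)
    return strips * per_strip


if __name__ == "__main__":
    # Hypothetical drone camera: 5472 x 3648 pixels at 1 cm GSD.
    w, h = footprint_m(5472, 0.01), footprint_m(3648, 0.01)
    print(f"footprint: {w:.1f} m x {h:.1f} m")
    print(images_to_cover(500.0, 500.0, w, h), "frames for a 500 m x 500 m site")
```

A footprint of tens of meters means hundreds of frames for even a small site, whereas a single satellite scene spanning kilometers would cover it in one image.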

Geometric characteristics of imagery

For GIS purposes, imagery is divided into six categories based on geometry. Each category affects the usefulness of the imagery for certain applications or practices, as well as how the imagery should be handled in the application to achieve optimal results. Some types of imagery are best handled in an image-centric mode and some in a map-centric mode. For example, vertical imagery and basemap imagery should be handled in a map-centric mode. Oblique and motion imagery are generally handled in an image-centric mode, possibly with the option to switch to map mode depending on the obliquity. Stereo imagery must be handled in image-centric mode in a dedicated stereo view. Finally, pictures do not have adequate geometric positioning information and should be handled in a separate pop-up window. Each type of imagery has uses and limitations driven by its geometry:

- Imagery basemap—A processed, orthorectified image or collection of image tiles that has the integrity of a map and is used as a reference. Image basemaps are commonly used as a backdrop to GIS data for context and are often color balanced for optimum visual appeal. Thus, they may not be suitable for automated feature extraction.

- Vertical imagery—Imagery that is collected for mapping purposes and has associated geopositioning metadata. It is used primarily to produce geometrically accurate basemaps and for automated or semiautomated feature extraction.

- Oblique imagery—Imagery that is collected with off-nadir look angles that may make it unsuitable for precise mapping, but very useful for visual interpretation and situational awareness. Imagery used in monitoring applications is often oblique. It has geopositioning metadata.

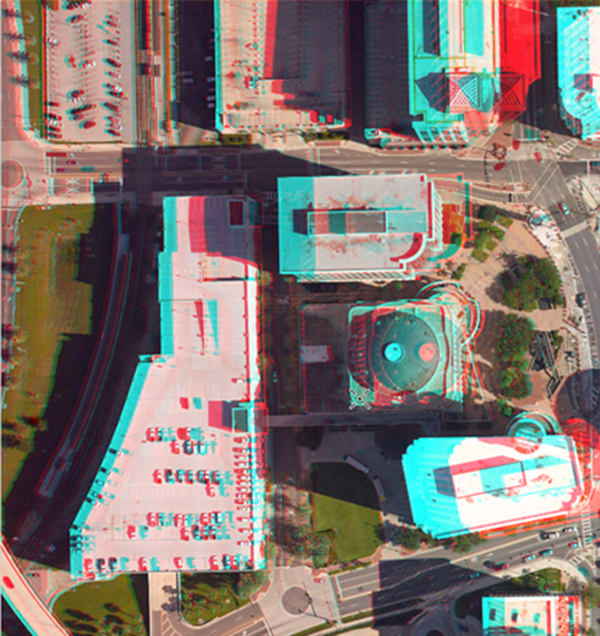

- Stereo imagery—Overlapping imagery that is collected specifically for stereo exploitation and has precise geopositioning metadata. Stereo imagery is used primarily for accurate 3D feature compilation for creating and updating land base and GIS layers.

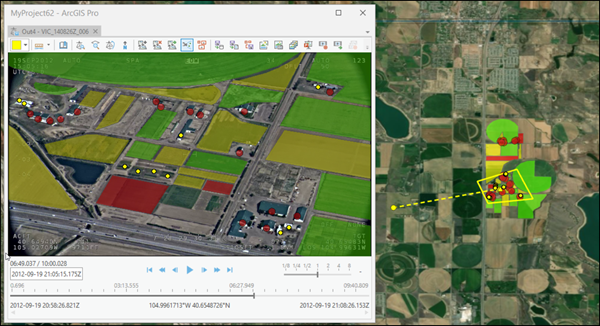

- Motion imagery—Multiframe imagery, such as video data, that captures motion at 1 to 120 hertz. This imagery usually has georeferencing metadata embedded in the digital video stream.

- Picture—An image that has no geopositioning metadata or inadequate geopositioning metadata. Its intensity values may or may not have any degree of radiometric integrity. Pictures are often historical or are collected in support of ground reference surveys.
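The six categories above can be read as a decision procedure over what is known about an image. The sketch below is a hypothetical simplification: the `ImageInfo` fields, the check order, and the 15 degree oblique cutoff are assumptions for illustration, not how ArcGIS Pro categorizes imagery. The handling modes are taken from the description of each category.

```python
from dataclasses import dataclass

# Hypothetical cutoff: look angles beyond this are treated as oblique.
OBLIQUE_CUTOFF_DEG = 15.0

# Handling modes as described for each category.
HANDLING = {
    "Imagery basemap": "map-centric",
    "Vertical imagery": "map-centric",
    "Oblique imagery": "image-centric (optionally map mode)",
    "Stereo imagery": "image-centric, dedicated stereo view",
    "Motion imagery": "image-centric (optionally map mode)",
    "Picture": "separate pop-up window",
}


@dataclass
class ImageInfo:
    """Hypothetical summary of what is known about an image."""
    has_geopositioning: bool
    is_orthorectified_product: bool = False
    frame_rate_hz: float = 0.0  # > 0 for multiframe (video) imagery
    collected_for_stereo: bool = False
    off_nadir_deg: float = 0.0


def categorize(info: ImageInfo) -> str:
    """Assign one of the six geometric categories, checking the most specific first."""
    if info.is_orthorectified_product:
        return "Imagery basemap"
    if not info.has_geopositioning:
        return "Picture"
    if info.frame_rate_hz > 0:
        return "Motion imagery"
    if info.collected_for_stereo:
        return "Stereo imagery"
    if info.off_nadir_deg > OBLIQUE_CUTOFF_DEG:
        return "Oblique imagery"
    return "Vertical imagery"


if __name__ == "__main__":
    drone_video = ImageInfo(has_geopositioning=True, frame_rate_hz=30.0)
    category = categorize(drone_video)
    print(category, "->", HANDLING[category])
```

Checking the basemap and picture cases first reflects that processing history and missing metadata override the collection geometry.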

Basemap imagery

This satellite image map of San Francisco is from the Esri imagery basemap. It is made up of multiple orthorectified images that have been color balanced and mosaicked together along seam lines. Rectification artifacts are most evident in the bridge in the lower right corner of the scene, which is disjointed where the water meets the land. The displacement is caused by relief distortion in the original image that was not accounted for in the elevation model used for rectification. Basemaps are very accurate, but only for features that lie on the bare-earth surface. Buildings, bridges, and other tall features are accurate only at their base, where they meet the ground. The radiometry of the basemap is also heavily modified to provide an aesthetically appealing image, so use caution when extracting feature data from basemaps. Basemaps generally serve as a backdrop to GIS layers and, if current, are an excellent source for manual extraction of features for land base creation and update. However, it is not unusual for basemaps to be out of date because of the time and expense required to create them.
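The size of the misplacement can be estimated with the standard relief displacement relationship for a vertical photo, d = r × h / H, where r is the radial distance of the displaced point from the nadir point, h is the object's height above the surface used for rectification, and H is the flying height above that surface. A ground-space equivalent is the object height times the tangent of the viewing angle. The sketch assumes flat terrain, and all numbers are hypothetical.

```python
import math


def relief_displacement(radial_distance: float, object_height_m: float,
                        flying_height_m: float) -> float:
    """Relief displacement on a vertical photo: d = r * h / H.

    radial_distance: distance of the displaced top from the nadir point (any unit);
    object_height_m: height of the top above the surface modeled for rectification;
    flying_height_m: flying height above that same surface.
    The result is in the same unit as radial_distance.
    """
    return radial_distance * object_height_m / flying_height_m


def ground_lean_m(object_height_m: float, view_angle_deg: float) -> float:
    """Horizontal offset of an object's top from its base on the ground,
    for a line of sight view_angle_deg off nadir (flat-terrain approximation)."""
    return object_height_m * math.tan(math.radians(view_angle_deg))


if __name__ == "__main__":
    # Hypothetical: top imaged 80 mm from nadir, 60 m tall, flown 1,200 m above it.
    print(f"{relief_displacement(80.0, 60.0, 1200.0):.1f} mm on the photo")
    for angle in (0, 10, 20):
        print(f"{angle:>2} deg off nadir: top shifted {ground_lean_m(60.0, angle):.1f} m")
```

A 60 m feature seen 20 degrees off nadir leans roughly 22 m, which is why tall features in a basemap are accurate only at their base.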

Vertical imagery

Vertical imagery is usually collected for mapping purposes. It provides clear views of the terrain, has excellent geometric integrity, and can easily be orthorectified. If vertical imagery has not been overtly color balanced, it is very useful for automated classification and feature extraction based on spectral characteristics. Vertical imagery also has a relatively uniform pixel size (GSD) and scale across the image. Vertical imagery is often the data source for basemaps.

Oblique imagery

Oblique imagery is often used for situational awareness and analysis. Its oblique viewing angle makes features easier to identify and collect, and it often offers more intuitive views of the region and features of interest. Depending on the sensor and the distance to the ground, the scale and GSD can vary dramatically across the image. Oblique imagery is best viewed and analyzed in perspective mode in the image space analysis application in Image Analyst.
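The variation in GSD across an oblique frame can be approximated over flat terrain: at a look angle off nadir, slant range grows as 1/cos of that angle, and the ground is also foreshortened along the look direction, giving roughly 1/cos² there. The sketch assumes each pixel subtends a fixed small angle; the 10 cm nadir GSD, 45 degree tilt, and 10 degree half field of view are hypothetical.

```python
import math


def oblique_gsd_m(nadir_gsd_m: float, look_angle_deg: float) -> tuple:
    """Approximate (along-look, across-look) GSD at a look angle off nadir.

    Flat terrain, small pixel angle: slant range grows as 1/cos(angle), and the
    ground is also foreshortened along the look direction, giving 1/cos^2.
    """
    angle = abs(look_angle_deg)
    if angle >= 90.0:
        raise ValueError("look angle must be less than 90 degrees")
    c = math.cos(math.radians(angle))
    return nadir_gsd_m / (c * c), nadir_gsd_m / c


def gsd_near_and_far(nadir_gsd_m: float, tilt_deg: float, half_fov_deg: float):
    """GSD at the near and far edges of a frame tilted tilt_deg off nadir."""
    near = oblique_gsd_m(nadir_gsd_m, tilt_deg - half_fov_deg)
    far = oblique_gsd_m(nadir_gsd_m, tilt_deg + half_fov_deg)
    return near, far


if __name__ == "__main__":
    near, far = gsd_near_and_far(0.10, 45.0, 10.0)
    print(f"near edge: {near[0]:.3f} m along look, {near[1]:.3f} m across")
    print(f"far edge:  {far[0]:.3f} m along look, {far[1]:.3f} m across")
```

Under these assumptions the along-look GSD roughly doubles from the near edge (about 0.15 m) to the far edge (about 0.30 m) of a single frame.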

Stereo imagery

Stereo imagery is collected for several purposes, most often for extracting terrain models and building footprints and roofs, and for vegetation management such as forestry. Its primary uses are 3D feature extraction and the identification and interpretation of features that are difficult or impossible to see monoscopically, such as the ground beneath tree canopy. Stereo imagery is viewed, analyzed, and used for collection of 3D features in the Stereo Mapping application in Image Analyst.
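Heights come out of stereo imagery through parallax, the apparent shift of a point between the two overlapping images. A minimal sketch of the classic parallax equation for vertical stereo pairs, h = H × dP / (P + dP), is shown below. It assumes ideal vertical photos over level ground, and the numbers are hypothetical; production stereo mapping relies on full sensor models rather than this simplification.

```python
def height_from_parallax(flying_height_m: float, base_parallax: float,
                         parallax_difference: float) -> float:
    """Object height from the stereo parallax equation h = H * dP / (P + dP).

    flying_height_m: flying height above the object's base;
    base_parallax: absolute parallax measured at the object's base;
    parallax_difference: parallax at the top minus parallax at the base
    (same length unit as base_parallax).
    """
    return flying_height_m * parallax_difference / (base_parallax + parallax_difference)


if __name__ == "__main__":
    # Hypothetical tree: 90 mm parallax at its base, 2 mm more at its crown,
    # photographed from 1,000 m above the ground.
    print(f"tree height ~ {height_from_parallax(1000.0, 90.0, 2.0):.1f} m")
```

A parallax difference of only 2 mm corresponds to a height of about 22 m here, which is why stereo collection requires precise geopositioning metadata.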

Motion imagery

Full Motion Video (FMV) in Image Analyst uses the metadata embedded in the video stream to seamlessly convert coordinates between video image space and map space, similar to the way the Image Coordinate System (ICS) transforms still imagery. This provides the foundation for interpreting video data in the full context of all other geospatial data and information within your GIS. For example, you can view the video frame footprint, frame center, and position of the imaging platform on the map view as the video plays, together with your GIS layers such as buildings with IDs, geofences, and other pertinent information.
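The core idea behind placing a frame center on the map, projecting the sensor's line of sight from the platform to the ground, can be sketched with flat-earth geometry. This is not how FMV is implemented: the function and parameter names are hypothetical rather than metadata field names, the terrain is assumed flat, and a spherical earth radius is used to convert meter offsets to latitude and longitude.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean earth radius; spherical approximation


def frame_center(lat_deg: float, lon_deg: float, height_agl_m: float,
                 azimuth_deg: float, depression_deg: float) -> tuple:
    """Approximate ground point at the center of the video frame.

    lat_deg, lon_deg: platform position; height_agl_m: height above flat ground;
    azimuth_deg: look direction clockwise from north;
    depression_deg: look angle below the horizon (90 = straight down).
    """
    if not 0.0 < depression_deg <= 90.0:
        raise ValueError("sensor must look below the horizon")
    ground_range = height_agl_m / math.tan(math.radians(depression_deg))
    north = ground_range * math.cos(math.radians(azimuth_deg))
    east = ground_range * math.sin(math.radians(azimuth_deg))
    # Convert meter offsets to degree offsets on a sphere.
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat_deg + dlat, lon_deg + dlon


if __name__ == "__main__":
    # Hypothetical drone 1,000 m above ground, looking northeast, 45 degrees down.
    print(frame_center(37.0, -122.0, 1000.0, 45.0, 45.0))
```

Recomputing this for every frame as the metadata updates is what lets a frame center be tracked on the map while the video plays.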

Summary

It is important to understand the objectives and requirements of your project in order to acquire the appropriate type of imagery. The suitability of a particular type of imagery depends on the information content and the geometric characteristics of the imagery. ArcGIS Pro handles the different types of imagery in ways that best exploit both the information contained in the imagery and its geometry in viewing, analysis, and exploitation environments.

The characteristics of imagery, its suitability for a general type of application, and how image types are handled in ArcGIS Image Analyst are summarized in the table below.

| Image type | Use | Prevalence | ArcGIS Pro view |
|---|---|---|---|
| Basemap imagery | Context | Moderate | Map view |
| Vertical imagery | Map creation and update | Moderate | Map view |
| Oblique imagery | Situation awareness | High | Map view in Perspective mode |
| Stereo imagery | Precise mapping in 3D | Low to moderate | Stereo map view |
| Motion imagery | Situation awareness | Low to moderate | Video player linked to map view |
| Picture | Reference | Low | Pop-up window |

Matching each type of imagery to the applications it suits best will optimize results and help satisfy project requirements in operational, academic, and research environments.