| Label | Explanation | Data Type |
| --- | --- | --- |
| Input Features | The input point feature class to be aggregated into space-time bins. | Feature Layer |
| Output Space Time Cube | The output netCDF data cube that will be created to contain counts and summaries of the input feature point data. | File |
| Time Field | The field containing the date and time (timestamp) for each point. This field must be of type Date. | Field |
| Template Cube (Optional) | A reference space-time cube used to define the Output Space Time Cube extent of analysis, bin dimensions, and bin alignment. Time Step Interval, Distance Interval, and Reference Time information are also obtained from the template cube. This template cube must be a netCDF (.nc) file that has been created using this tool. A space-time cube created by aggregating into Defined Locations cannot be used as a Template Cube. | File |
| Time Step Interval (Optional) | The number of seconds, minutes, hours, days, weeks, months, or years that will represent a single time step. All points within the same Time Step Interval and Distance Interval will be aggregated. (When a Template Cube is provided, this parameter is ignored, and the Time Step Interval value is obtained from the template cube.) | Time Unit |
| Time Step Alignment (Optional) | Defines how aggregation will occur based on a given Time Step Interval. If a Template Cube is provided, its Time Step Alignment overrides this parameter setting. | String |
| Reference Time (Optional) | The date/time to use to align the time-step intervals. If you want to bin your data weekly from Monday to Sunday, for example, you could set a reference time of Sunday at midnight to ensure bins break between Sunday and Monday at midnight. (When a Template Cube is provided, this parameter is disabled and the Reference Time is based on the Template Cube.) | Date |
| Distance Interval (Optional) | The size of the bins used to aggregate the Input Features. All points that fall within the same Distance Interval and Time Step Interval will be aggregated. When aggregating into a hexagon grid, this distance is used as the height to construct the hexagon polygons. (When a Template Cube is provided, this parameter is disabled and the distance interval value will be based on the Template Cube.) | Linear Unit |
| Summary Fields | The numeric field containing attribute values used to calculate the specified statistic when aggregating into a space-time cube. Multiple statistic and field combinations can be specified. Available statistic types are Sum, Mean, Minimum, Maximum, Standard Deviation, and Median. Available fill types are zeros, spatial neighbors, space-time neighbors, and temporal trend. Note: Null values present in any of the summary field records will result in those features being excluded from the output cube. If there are null values present in your Input Features, it is highly recommended that you run the Fill Missing Values tool first. If, after running the Fill Missing Values tool, there are still null values present and having the count of points in each bin is part of your analysis strategy, you may want to consider creating separate cubes, one for the count (without Summary Fields) and one for Summary Fields. If the set of null values is different for each summary field, you may also consider creating a separate cube for each summary field. | Value Table |
| Aggregation Shape Type (Optional) | The shape of the polygon mesh into which the input feature point data will be aggregated. | String |
| Defined Polygon Locations (Optional) | The polygon features into which the input point data will be aggregated. These can represent county boundaries, police beats, or sales territories, for example. | Feature Layer |
| Location ID (Optional) | The field containing the ID number for each unique location. | Field |

Summary

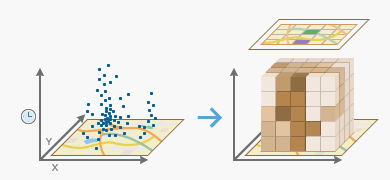

Summarizes a set of points into a netCDF data structure by aggregating them into space-time bins. Within each bin, the points are counted, and specified attributes are aggregated. For all bin locations, the trend for counts and summary field values are evaluated.

Learn more about how Create Space Time Cube By Aggregating Points works

Illustration

Usage

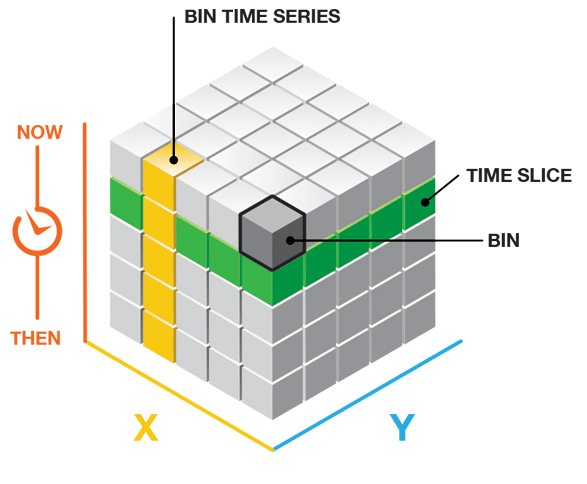

This tool aggregates your point Input Features into space-time bins. The data structure it creates can be thought of as a three-dimensional cube made up of space-time bins with the x and y dimensions representing space and the t dimension representing time.

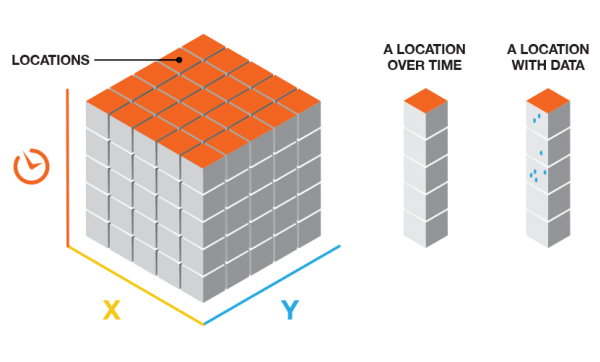

Every bin has a fixed position in space (x,y) and in time (t). Bins covering the same (x,y) area share the same location ID. Bins encompassing the same duration share the same time-step ID.

Each bin in the space-time cube has a LOCATION_ID, time_step_ID, COUNT value, and values for any Summary Fields that were aggregated when the cube was created. Bins associated with the same physical location will share the same location ID and together will represent a time series. Bins associated with the same time-step interval will share the same time-step ID and together will comprise a time slice. The count value for each bin reflects the number of points that occurred at the associated location within the associated time-step interval.
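The bin structure described above can be illustrated with a small sketch. This is not the tool's implementation: the `bin_points` function, its arguments, and the use of (column, row) pairs as location IDs are hypothetical, and the sketch ignores the tool's actual bin alignment rules.

```python
from collections import Counter
from datetime import datetime, timedelta


def bin_points(points, x_min, y_min, distance_interval, t_start, time_step):
    """Count points per (location_id, time_step_id) bin on a square grid.

    points: iterable of (x, y, timestamp) tuples in projected units.
    Location IDs here are (column, row) pairs; this numbering is an
    assumption made for illustration only.
    """
    counts = Counter()
    for x, y, t in points:
        col = int((x - x_min) // distance_interval)
        row = int((y - y_min) // distance_interval)
        time_step_id = (t - t_start) // time_step  # timedelta // timedelta -> int
        counts[((col, row), time_step_id)] += 1
    return counts


points = [
    (10.0, 10.0, datetime(2020, 1, 1)),
    (20.0, 30.0, datetime(2020, 1, 3)),
    (60.0, 10.0, datetime(2020, 1, 2)),
    (15.0, 15.0, datetime(2020, 1, 9)),
]
cube = bin_points(points, 0.0, 0.0, 50.0, datetime(2020, 1, 1), timedelta(days=7))

# Time series for one location: the counts at each time step
series = [cube[((0, 0), step)] for step in range(2)]
```

In this toy example, the bins that share location `(0, 0)` form a time series, and the bins that share time step `0` form a time slice.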

The Input Features should be points, such as crime or fire events, disease incidents, customer sales data, or traffic accidents. Each point should have a date associated with it. The field containing the event timestamp must be of type Date. The tool requires a minimum of 60 points and a variety of timestamps. The tool will fail if the parameters specified result in a cube with more than two billion bins.
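Before running the tool, it can help to estimate whether a planned cube stays under these limits. The sketch below is a rough estimate for a fishnet grid only; `estimate_bin_count` is a hypothetical helper, and the tool's own bin placement may add a row or column that this arithmetic does not account for.

```python
import math

MAX_BINS = 2_000_000_000  # the tool fails above two billion bins
MIN_POINTS = 60           # minimum number of input points


def estimate_bin_count(width, height, distance_interval, n_time_steps):
    """Approximate bin count for a fishnet cube: columns x rows x time steps."""
    cols = math.ceil(width / distance_interval)
    rows = math.ceil(height / distance_interval)
    return cols * rows * n_time_steps


# Assumed example: 100 km x 80 km extent, 500 m bins, 52 weekly time steps
n_bins = estimate_bin_count(100_000, 80_000, 500, 52)
within_limit = n_bins <= MAX_BINS
```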

This tool requires projected data to accurately measure distances.

Output from this tool is a netCDF representation of your input points as well as messages summarizing cube characteristics. Messages are written at the bottom of the Geoprocessing pane during tool execution. You can access the messages by hovering over the progress bar, clicking the pop-out button, or expanding the messages section in the Geoprocessing pane. You can also access the messages for a previous run of the Create Space Time Cube By Aggregating Points tool via the Geoprocessing History. You would use the netCDF file as input to other tools such as the Emerging Hot Spot Analysis tool or the Local Outlier Analysis tool. See Visualizing the Space Time Cube for strategies allowing you to look at cube contents.

Select a field of type Date for the Time Field parameter. This field should contain the timestamp associated with each point feature.

The Time Step Interval defines how you want to partition your aggregated points across time. You might decide to aggregate points using one-day, one-week, or one-year intervals, for example. Time-step intervals are always fixed durations, and the tool requires a minimum of 10 time steps. If you do not provide a Time Step Interval value, the tool will calculate one for you. See Learn more about how the Create Space Time Cube By Aggregating Points tool works for details on how default time-step intervals are computed. Valid time-step interval units are Years, Months, Weeks, Days, Hours, Minutes, and Seconds.

Note:

While a number of time units appear in the Time Step Interval drop-down list, the tool only supports Years, Months, Weeks, Days, Hours, Minutes, and Seconds.

If your space-time cube could not be created, the tool may have been unable to structure the input data you provided into ten time-step intervals. If you receive an error message running this tool, examine the timestamps of the input points to make sure they include a range of values. The range of values must span at least ten seconds as this is the smallest time increment that the tool will take. Ten time-step intervals are required by the Mann-Kendall statistic.
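A quick way to check whether your timestamps can support ten time steps is to compare their overall span with the interval you plan to use. The following is a minimal sketch under the assumption that time steps are counted from the earliest timestamp; `count_time_steps` is a hypothetical helper and does not reproduce the tool's alignment logic.

```python
from datetime import datetime, timedelta

MIN_TIME_STEPS = 10  # minimum number of time steps the tool requires


def count_time_steps(timestamps, time_step):
    """Approximate number of fixed-length steps needed to cover the timestamps."""
    span = max(timestamps) - min(timestamps)
    # Floor division counts whole steps; the step holding the last point adds one.
    return span // time_step + 1


timestamps = [datetime(2020, 1, 1), datetime(2020, 2, 10), datetime(2020, 3, 1)]

weekly_steps = count_time_steps(timestamps, timedelta(weeks=1))
daily_steps = count_time_steps(timestamps, timedelta(days=1))

enough_weekly = weekly_steps >= MIN_TIME_STEPS
enough_daily = daily_steps >= MIN_TIME_STEPS
```

Here the 60-day span is too short for weekly steps but long enough for daily steps.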

When creating a space-time cube with incident data, depending on the Time Step Interval that you choose, it is possible to create a bin at the beginning or end of the cube that does not have data across the entire span of time. For instance, if you choose a 1-month Time Step Interval and your data does not divide evenly into 1-month intervals, there will be a time step at either the beginning or the end that does not have data over its entire span. This can bias your results because the temporally biased time step will appear to have significantly fewer points than other time steps, which is an artifact of the aggregation scheme. The messages indicate whether there is temporal bias in the first or last time step. One solution is to create a selection set of your data so that it falls evenly within the desired Time Step Interval.

It is not uncommon for a dataset to have a regularly spaced temporal distribution. For instance, you might have yearly data that all falls on January 1st of each year, or monthly data that is all timestamped the first of each month. This kind of data is often referred to as panel data. With panel data, temporal bias calculations will often show very high percentages. This is to be expected, as each bin will only cover one particular time unit in the given time step. For instance, if you chose a 1 year Time Step Interval and your data fell on January 1st of each year, each bin would only cover one day out of the year. This is perfectly acceptable since it applies to each bin. Temporal bias becomes an issue when it is only present for certain bins due to bin creation parameters rather than true data distribution. It is important to evaluate the temporal bias in terms of the expected coverage in each bin based on your data's distribution.

The temporal bias in the output report is calculated as the percentage of the time span that has no data present. For example, an empty bin would have 100 percent temporal bias. A bin with a 1 month time span and an end Time Step Alignment that only has data for the second two weeks of the first time step would have a 50 percent first time step temporal bias. A bin with a 1 month time span and a start Time Step Alignment that only has data for the first two weeks of the last time step would have a 50 percent last time step temporal bias.
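The two 50 percent examples above can be reproduced with simple date arithmetic. The sketch below is one interpretation of the description, not the tool's code: it treats first-step bias as the share of the step before the earliest point and last-step bias as the share after the latest point. Both function names are hypothetical.

```python
from datetime import datetime


def first_step_bias(step_start, step_end, first_timestamp):
    """Percent of the first time step that precedes the earliest point."""
    span = (step_end - step_start).total_seconds()
    return 100.0 * (first_timestamp - step_start).total_seconds() / span


def last_step_bias(step_start, step_end, last_timestamp):
    """Percent of the last time step that follows the latest point."""
    span = (step_end - step_start).total_seconds()
    return 100.0 * (step_end - last_timestamp).total_seconds() / span


# A 28-day time step with data covering only half of it
step_start = datetime(2021, 2, 1)
step_end = datetime(2021, 3, 1)
midpoint = datetime(2021, 2, 15)

print(first_step_bias(step_start, step_end, midpoint))  # 50.0
print(last_step_bias(step_start, step_end, midpoint))   # 50.0
```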

Once you create a space-time cube, the spatial extent of the cube can never be extended. If further analysis of the space-time cube will involve the use of a study area (such as a Polygon Analysis Mask in the Emerging Hot Spot Analysis tool), you will want to ensure that the Polygon Analysis Mask does not extend beyond the extent of the Input Features when you create your cube. Setting the study area polygon(s) that you will use in future analysis as the Extent environment setting when you create the cube will ensure that the extent of the cube is as large as you need it to be at the beginning of your analysis.

Legacy:

The method with which the Create Space Time Cube By Aggregating Points tool creates the extent of the space-time cube has changed in the releases of ArcGIS Pro 1.3 and ArcMap 10.5. You can learn more about this change in Space-time cube bias adjustment. The new bias adjustment will provide a better result, but if—for any reason—you need to re-create the cube with the previous extent, you can specify the extent through the Extent environment setting.

You can create a Template Cube that can be used each time you run your analysis, especially if you want to compare data for a series of time periods. By providing the same template cube, you ensure the extent of your analysis, bin size, time-step interval, reference time, and time-step alignment are always consistent.

If you provide a Template Cube, input points that fall outside of the template cube extent will be excluded from analysis. Also, if the spatial reference associated with the input point features is different from the spatial reference associated with the template cube, the tool will project the Input Features to match the template cube before beginning the aggregation process. The spatial reference associated with the template cube will override Output Coordinate System settings as well. In addition, the Template Cube, when specified, will determine the processing extent used, even if you specify a different processing extent. See How Creating a Space Time Cube works for more information.

The Reference Time can be a date and time value or solely a date value; it cannot be solely a time value. The expected format is determined by the computer's regional time settings.
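To see how a reference time controls where time steps break, consider the sketch below. It assumes that time steps are laid out as fixed intervals counted from the reference time; the functions `time_step_index` and `time_step_bounds` are hypothetical and only illustrate the arithmetic.

```python
from datetime import datetime, timedelta


def time_step_index(timestamp, reference_time, time_step):
    """Index of the step containing timestamp; step 0 starts at reference_time."""
    return (timestamp - reference_time) // time_step


def time_step_bounds(index, reference_time, time_step):
    """Start (inclusive) and end (exclusive) of the step with the given index."""
    start = reference_time + index * time_step
    return start, start + time_step


reference = datetime(2024, 1, 1)  # a Monday at 00:00, so weekly bins run Monday to Sunday
week = timedelta(weeks=1)

sunday_night = time_step_index(datetime(2024, 1, 7, 23, 59), reference, week)
monday_morning = time_step_index(datetime(2024, 1, 8, 0, 0), reference, week)
before_reference = time_step_index(datetime(2023, 12, 31), reference, week)
```

A point late on Sunday and a point early on Monday land in different weekly steps, which is the break the reference time is meant to enforce.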

There are a few options regarding how your points are aggregated spatially using the Aggregation Shape Type parameter. If you want to aggregate to a regularly shaped grid, you can choose either a fishnet or hexagon shape. While fishnet grids are the more common aggregation shape used, hexagons may be a better option for certain analyses. If you have boundaries or locations that make sense for your analysis (such as census blocks or police beats), you can also use those to aggregate using the Defined Locations option.

Note:

If your Defined Locations are stored in a file geodatabase and contain true curves (stored as arcs as opposed to stored with vertices), polygon shapes will be distorted when stored in the space-time cube. To check if your Defined Locations contain true curves, run the Check Geometry tool with the OGC Validation Method. If you receive an error message stating that the selected option does not support non-linear segments, then true curves exist in your dataset and may be eliminated and replaced with vertices by using the Densify tool with the Angle Densification Method before creating the space-time cube.

Because a grid cube is always rectangular even if your point data is not, some locations will have point counts of zero for all time steps. For many analyses, only locations with data (at least one point count greater than zero for at least one time step) will be included in the analysis.


When creating a cube aggregating into defined locations, all user-provided defined locations will be included, even those that have no points at any time step.

The Distance Interval specifies how large the space-time bins should be. The bins are used to aggregate your point data. You may decide to make each fishnet bin 50 meters by 50 meters for example. If you are aggregating into hexagons, the Distance Interval is the height of each hexagon, and the width of the resulting hexagons will be 2 times the height divided by the square root of 3. Unless a Template Cube is specified, the bin in the upper-left corner of the cube will be centered on the upper-left corner of the spatial extent for your Input Features.
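The hexagon width relationship stated above is easy to check numerically. A minimal sketch, with `hexagon_width` as a hypothetical helper name:

```python
import math


def hexagon_width(height):
    """Width of a hexagon whose height equals the Distance Interval."""
    return 2.0 * height / math.sqrt(3.0)


# A Distance Interval of 3 (miles, for example) gives hexagons about 3.46 wide
print(round(hexagon_width(3.0), 4))  # 3.4641
```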

You will want to select a Distance Interval that makes sense for your analysis. You should find the balance between making your distance interval too large and losing the underlying patterns in your point data, and making your distance interval too small so you end up with a cube filled with zero counts. If you do not provide a Distance Interval value, the tool will calculate one for you. See How Create Space Time Cube By Aggregating Points works for details on how default distance intervals are computed. The distance interval units supported are Kilometers, Meters, Miles, and Feet.

The trend analysis performed on the aggregated count data and summary field values is based on the Mann-Kendall statistic.
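For readers unfamiliar with the Mann-Kendall statistic, the sketch below shows the textbook calculation for a single bin time series: S is the sum of the signs of all pairwise differences, and z is its normal approximation. It omits any correction for tied values, and it is not claimed to match the tool's exact computation.

```python
import math


def mann_kendall_s(series):
    """Mann-Kendall S: sum of sign(x_j - x_i) over all pairs with i < j."""
    s = 0
    n = len(series)
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    return s


def mann_kendall_z(series):
    """Normal approximation of the statistic, without a tie correction."""
    n = len(series)
    s = mann_kendall_s(series)
    variance = n * (n - 1) * (2 * n + 5) / 18.0
    if s > 0:
        return (s - 1) / math.sqrt(variance)
    if s < 0:
        return (s + 1) / math.sqrt(variance)
    return 0.0


counts = [2, 3, 3, 5, 6, 8, 7, 9, 11, 12]  # ten time steps at one location
s = mann_kendall_s(counts)
z = mann_kendall_z(counts)
```

A large positive z suggests an increasing trend in the bin counts, a large negative z a decreasing one.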

The following statistical operations are available for the aggregation of attributes with this tool: Sum, Mean, Minimum, Maximum, Standard Deviation, and Median.

When filling empty bins with SPATIAL_NEIGHBORS, Queen's case contiguity (contiguity based on edges and nodes) of the 2nd order (includes neighbors and neighbors of neighbors) is used. A minimum of 4 spatial neighbors is required to fill the empty bin using this option.
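On a fishnet grid, second-order contiguity based on edges and nodes amounts to every cell within two steps in any direction. The sketch below enumerates those cells and checks the four-neighbor minimum. It assumes a rectangular grid addressed by (column, row), and it assumes the minimum refers to neighbors that actually hold a value; both function names are hypothetical.

```python
MIN_SPATIAL_NEIGHBORS = 4


def second_order_queen_neighbors(col, row, n_cols, n_rows):
    """Cells within two edge-or-corner steps of (col, row) on a rectangular grid."""
    neighbors = []
    for dc in range(-2, 3):
        for dr in range(-2, 3):
            if (dc, dr) == (0, 0):
                continue  # skip the bin itself
            c, r = col + dc, row + dr
            if 0 <= c < n_cols and 0 <= r < n_rows:
                neighbors.append((c, r))
    return neighbors


def can_fill_from_neighbors(neighbor_values):
    """True when at least four neighbors carry a value (None marks an empty bin)."""
    return sum(v is not None for v in neighbor_values) >= MIN_SPATIAL_NEIGHBORS


interior = second_order_queen_neighbors(5, 5, 10, 10)
corner = second_order_queen_neighbors(0, 0, 10, 10)
```

An interior bin has 24 such neighbors, while a corner bin has only 8, so bins near the cube edge are more likely to fall short of the minimum.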

When filling empty bins with SPACE_TIME_NEIGHBORS, Queen's case contiguity (contiguity based on edges and nodes) of the 2nd order (includes neighbors and neighbors of neighbors) is used. Additionally, temporal neighbors are used for each of the bins found to be spatial neighbors by going backward and forward 2 time steps. A minimum of 13 space-time neighbors is required to fill the empty bin using this option.

When filling empty bins with a TEMPORAL_TREND, the first two time periods and last two time periods at a given location must have values in their bins to interpolate values at other time periods for that location.

The TEMPORAL_TREND fill type uses the Interpolated Univariate Spline method in the SciPy Interpolation package.

Null values present in any of the summary field records will result in those features being excluded from the output cube. If there are null values present in your Input Features, it is highly recommended that you run the Fill Missing Values tool first. If, after running the Fill Missing Values tool, there are still null values present and having the count of points in each bin is part of your analysis strategy, you may want to consider creating separate cubes, one for the count (without Summary Fields) and one for Summary Fields. If the set of null values is different for each summary field, you may also consider creating a separate cube for each summary field.
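The effect of null values on point counts can be seen with a small sketch. It uses plain dictionaries as stand-ins for feature records and a hypothetical `usable_features` helper; the field names `Property` and `Age` are borrowed from the code sample for illustration.

```python
def usable_features(records, summary_fields):
    """Drop records that have a null (None) in any listed summary field."""
    return [
        rec for rec in records
        if all(rec.get(field) is not None for field in summary_fields)
    ]


records = [
    {"Property": 1200.0, "Age": 34},
    {"Property": None, "Age": 51},
    {"Property": 300.0, "Age": None},
]

count_only = usable_features(records, [])                 # cube without Summary Fields
both_fields = usable_features(records, ["Property", "Age"])
age_only = usable_features(records, ["Age"])
```

With no summary fields all three points are counted, with both fields only one survives, and with a single field two survive, which is why separate cubes can preserve more of the data.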

This tool can take advantage of the increased performance available in systems that use multiple CPUs (or multi-core CPUs). The tool will default to run using 50% of the processors available; however, the number of CPUs used can be increased or decreased using the Parallel Processing Factor environment. The increased processing speed is most noticeable when creating larger space-time cubes.

Parameters

arcpy.stpm.CreateSpaceTimeCube(in_features, output_cube, time_field, {template_cube}, {time_step_interval}, {time_step_alignment}, {reference_time}, {distance_interval}, summary_fields, {aggregation_shape_type}, {defined_polygon_locations}, {location_id})

| Name | Explanation | Data Type |
| --- | --- | --- |
| in_features | The input point feature class to be aggregated into space-time bins. | Feature Layer |
| output_cube | The output netCDF data cube that will be created to contain counts and summaries of the input feature point data. | File |
| time_field | The field containing the date and time (timestamp) for each point. This field must be of type Date. | Field |
| template_cube (Optional) | A reference space-time cube used to define the output_cube extent of analysis, bin dimensions, and bin alignment. The time_step_interval, distance_interval, and reference_time values are also obtained from the template cube. This template cube must be a netCDF (.nc) file that has been created using this tool. A space-time cube created by aggregating into DEFINED_LOCATIONS cannot be used as a template_cube. | File |
| time_step_interval (Optional) | The number of seconds, minutes, hours, days, weeks, months, or years that will represent a single time step. All points within the same time_step_interval and distance_interval will be aggregated. (When a template_cube is provided, this parameter is ignored, and the time_step_interval value is obtained from the template cube.) Examples of valid entries for this parameter are 1 Weeks, 13 Days, or 1 Months. | Time Unit |
| time_step_alignment (Optional) | Defines how aggregation will occur based on a given time_step_interval. If a template_cube is provided, its time_step_alignment overrides this parameter setting. | String |
| reference_time (Optional) | The date/time to use to align the time-step intervals. If you want to bin your data weekly from Monday to Sunday, for example, you could set a reference time of Sunday at midnight to ensure bins break between Sunday and Monday at midnight. (When a template_cube is provided, this parameter is ignored and the reference_time is based on the template_cube.) | Date |
| distance_interval (Optional) | The size of the bins used to aggregate the in_features. All points that fall within the same distance_interval and time_step_interval will be aggregated. When aggregating into a hexagon grid, this distance is used as the height to construct the hexagon polygons. (When a template_cube is provided, this parameter is ignored and the distance interval value will be based on the template_cube.) | Linear Unit |
| summary_fields [[Field, Statistic, Fill Empty Bins with],...] | The numeric field containing attribute values used to calculate the specified statistic when aggregating into a space-time cube. Multiple statistic and field combinations can be specified. Available statistic types are Sum, Mean, Minimum, Maximum, Standard Deviation, and Median. Available fill types are ZEROS, SPATIAL_NEIGHBORS, SPACE_TIME_NEIGHBORS, and TEMPORAL_TREND. Note: Null values present in any of the summary field records will result in those features being excluded from the output cube. If there are null values present in your Input Features, it is highly recommended that you run the Fill Missing Values tool first. If, after running the Fill Missing Values tool, there are still null values present and having the count of points in each bin is part of your analysis strategy, you may want to consider creating separate cubes, one for the count (without Summary Fields) and one for Summary Fields. If the set of null values is different for each summary field, you may also consider creating a separate cube for each summary field. | Value Table |
| aggregation_shape_type (Optional) | The shape of the polygon mesh into which the input feature point data will be aggregated. | String |
| defined_polygon_locations (Optional) | The polygon features into which the input point data will be aggregated. These can represent county boundaries, police beats, or sales territories, for example. | Feature Layer |
| location_id (Optional) | The field containing the ID number for each unique location. | Field |

Code sample

The following Python window script demonstrates how to use the CreateSpaceTimeCube tool.

    import arcpy

    arcpy.env.workspace = r"C:\STPM"
    arcpy.CreateSpaceTimeCube_stpm("Homicides.shp", "Homicides.nc", "OccDate", "#", "3 Months",
                                   "End time", "#", "3 Miles",
                                   "Property MEDIAN SPACETIME; Age STD ZEROS", "HEXAGON_GRID",
                                   "#", "#")

The following stand-alone Python script demonstrates how to use the CreateSpaceTimeCube tool.

    # Create Space Time Cube of homicide incidents in a metropolitan area

    # Import system modules
    import arcpy

    # Overwrite existing output by default
    arcpy.env.overwriteOutput = True

    # Local variables
    workspace = r"C:\STPM"

    try:
        # Set the current workspace (to avoid having to specify the full path to the
        # feature classes each time)
        arcpy.env.workspace = workspace

        # Create Space Time Cube of homicide incident data with 3 months and 3 miles settings
        # Also aggregate the median of property loss, no-data filled by space-time neighbors
        # Also aggregate the standard deviation of the victim's age, fill the no-data with zeros
        # Process: Create Space Time Cube By Aggregating Points
        cube = arcpy.CreateSpaceTimeCube_stpm("Homicides.shp", "Homicides.nc", "MyDate", "#",
                                              "3 Months", "End_time", "#", "3 Miles",
                                              "Property MEDIAN SPACETIME; Age STD ZEROS",
                                              "HEXAGON_GRID", "#", "#")

        # Create a polygon that defines where incidents are possible
        # Process: Minimum Bounding Geometry of homicide incident data
        arcpy.MinimumBoundingGeometry_management("Homicides.shp", "bounding.shp", "CONVEX_HULL",
                                                 "ALL", "#", "NO_MBG_FIELDS")

        # Emerging Hot Spot Analysis of homicide incident cube using 5 Miles neighborhood
        # distance and 2 neighborhood time step to detect hot spots
        # Process: Emerging Hot Spot Analysis
        cube = arcpy.EmergingHotSpotAnalysis_stpm("Homicides.nc", "COUNT", "EHS_Homicides.shp",
                                                  "5 Miles", 2, "bounding.shp")

    except arcpy.ExecuteError:
        # If any error occurred when running the tool, print the messages
        print(arcpy.GetMessages(2))

Environments

- Output Coordinate System

The spatial reference associated with the Template Cube, when specified, will override the Output Coordinate System environment setting.

- Extent

The processing extent of the Template Cube, when specified, will override the environment setting processing extent.

Licensing information

- Basic: Yes

- Standard: Yes

- Advanced: Yes